npm install @hono/cli

Not a `create-*` command, but the `hono` command

`hono --help`

# Show help

hono --help

# Display documentation

hono docs

# Search documentation

hono search middleware

# Send request to Hono app

hono request

# Start server

hono serve

# Generate an optimized Hono app

hono optimize

hono docs

hono docs [path]

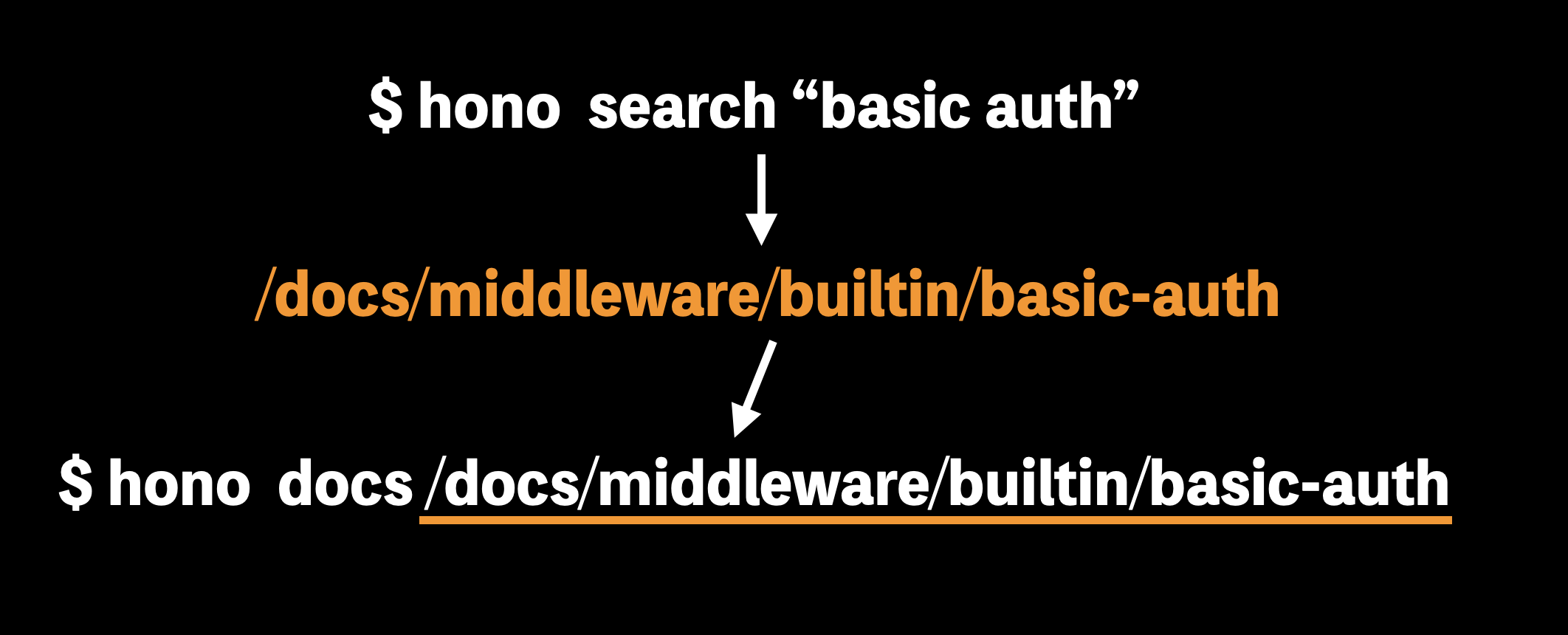

hono docs /docs/api/context

hono search

hono search <query>

hono search middleware

hono request

hono request [file]

hono request src/index.ts

hono request \

-P /api/users \

-X POST \

-d '{"name":"Alice"}' \

src/index.ts

wrangler dev

curl http://localhost:8787

hono request

hono search - Search the documentation

hono docs - Read the documentation

hono request - Test the app

### Workflow

1. Search documentation: `hono search <query>`

2. Read relevant docs: `hono docs [path]`

3. Test implementation: `hono request [file]`
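
Step 3 needs no running server. As far as I understand, `hono request` calls the app in process, which works because a Hono app is a fetch-style handler. Below is a minimal, dependency-free sketch of that idea. `requestApp` and the stand-in `app` are hypothetical names for illustration; a real Hono app would be the default export of src/index.ts.

```typescript
// Anything with a fetch(Request) method, which is the shape a Hono app exposes
type FetchApp = { fetch(req: Request): Response | Promise<Response> }

// Hypothetical stand-in for the app exported from src/index.ts
const app: FetchApp = {
  async fetch(req) {
    const { pathname } = new URL(req.url)
    if (req.method === 'POST' && pathname === '/api/users') {
      const body = await req.json()
      return new Response(JSON.stringify({ created: body }), {
        status: 201,
        headers: { 'Content-Type': 'application/json' }
      })
    }
    return new Response('Not Found', { status: 404 })
  }
}

// Build a Request for the given path and hand it straight to the app.
// No port is opened; the base URL only exists to make the path absolute.
export async function requestApp(
  target: FetchApp,
  path: string,
  init?: RequestInit
): Promise<Response> {
  return target.fetch(new Request(new URL(path, 'http://localhost'), init))
}
```

Calling `requestApp(app, '/api/users', { method: 'POST', body: '{"name":"Alice"}' })` plays roughly the same role as the `hono request -P /api/users -X POST -d ...` command above.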

hono serve

hono serve src/index.ts

The server starts at http://localhost:7070

The `--use` option

hono serve \

--use "logger()" \

src/index.ts

hono serve \

--use "logger()" \

--use "basicAuth({username:'foo',password:'bar'})" \

src/index.ts

hono serve \

--use "serveStatic({root:'./'})"hono serve \

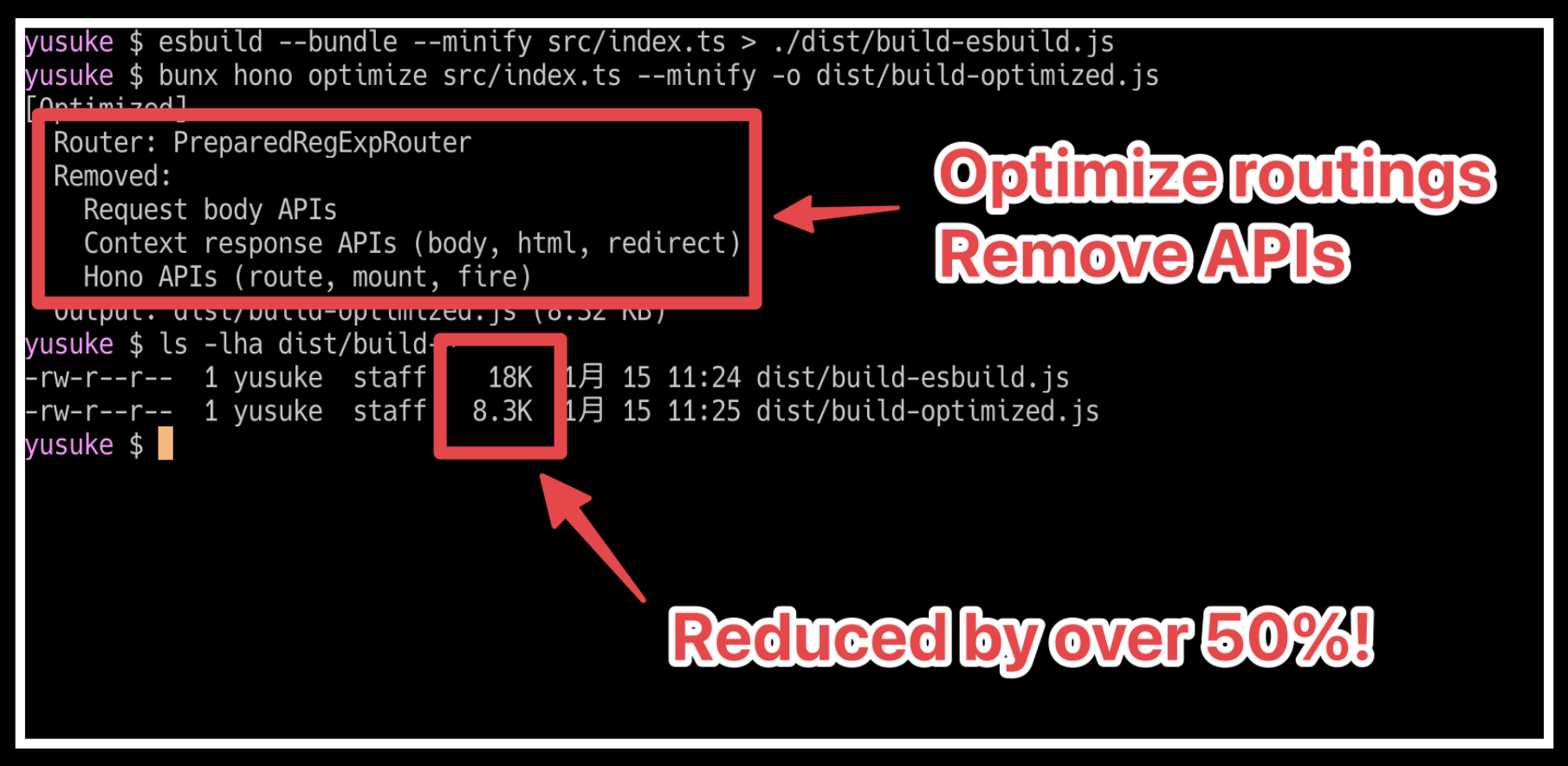

--use '(c) => proxy(`https://ramen-api.dev${new URL(c.req.url).pathname}`)'hono optimizehono optimize [entry]Honoを作ってくれる => ファイルサイズの削減route / mount / firec.body / c.json / c.html / c.text …c.req.json() / c.req.formData()…import { Hono } from 'hono'

const app = new Hono()

app.get('/', async (c) => {

return c.json({ message: 'Hello' })

})

app.get('/health', (c) => c.text('OK'))

export default app

Five subcommands

# Show help

hono --help

# Display documentation

hono docs

# Search documentation

hono search

# Send request to Hono app

hono request

# Start server

hono serve

# Generate an optimized Hono app

hono optimize

workers-fetch: the same functionality as `hono request`, split out for Cloudflare Workers

# GET request (auto-detects wrangler.json, wrangler.jsonc, or wrangler.toml)

workers-fetch

# With path

workers-fetch /api/users

# POST request

workers-fetch -X POST -H "Content-Type:application/json" -d '{"name":"test"}' /api/users

# Custom config file

workers-fetch -c wrangler.toml /api/test

# With timeout (5 seconds)

workers-fetch --timeout 5 /api/slow

wrangler run?

https://x.com/yusukebe/status/2011974518026485767/quotes

Cloudflare's Skill:

@modelcontextprotocol/hono - hono

@modelcontextprotocol/node - @hono/node-server
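
The Node.js pairing exists because a Node.js server speaks req / res while the Web Standards transport speaks Request / Response, so something has to translate between them. The sketch below shows that translation using only Node.js built-ins. It is a simplified illustration, not the actual implementation of @hono/node-server; `toWebRequest`, `writeWebResponse`, and `toRequestListener` are hypothetical names.

```typescript
import type { IncomingMessage, ServerResponse } from 'node:http'
import { Readable } from 'node:stream'

// A fetch-style handler: Web Standards Request in, Response out
type FetchHandler = (req: Request) => Response | Promise<Response>

// Convert a Node.js IncomingMessage into a Web Standards Request
export function toWebRequest(req: IncomingMessage): Request {
  const url = new URL(req.url ?? '/', `http://${req.headers.host ?? 'localhost'}`)
  const headers = new Headers()
  for (const [name, value] of Object.entries(req.headers)) {
    if (Array.isArray(value)) value.forEach((v) => headers.append(name, v))
    else if (value !== undefined) headers.set(name, value)
  }
  const hasBody = req.method !== 'GET' && req.method !== 'HEAD'
  const init = {
    method: req.method,
    headers,
    // Pass the body through as a stream instead of buffering it
    body: hasBody ? Readable.toWeb(req) : undefined,
    // Node.js requires duplex: 'half' when the body is a stream
    duplex: 'half'
  }
  return new Request(url, init as unknown as RequestInit)
}

// Write a Web Standards Response back onto a Node.js ServerResponse
export async function writeWebResponse(webRes: Response, res: ServerResponse): Promise<void> {
  res.statusCode = webRes.status
  webRes.headers.forEach((value, name) => res.setHeader(name, value))
  if (webRes.body) {
    const reader = webRes.body.getReader()
    for (;;) {
      const { done, value } = await reader.read()
      if (done) break
      // Forward each chunk as it arrives so streamed responses stay streamed
      res.write(value)
    }
  }
  res.end()
}

// Wrap a fetch-style handler as a (req, res) listener for node:http
export function toRequestListener(handler: FetchHandler) {
  return async (req: IncomingMessage, res: ServerResponse): Promise<void> => {
    const webRes = await handler(toWebRequest(req))
    await writeWebResponse(webRes, res)
  }
}
```

With node:http, the listener would be passed to `createServer(toRequestListener(handler))`. Error handling, aborted requests, and repeated headers such as Set-Cookie are left out of this sketch.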

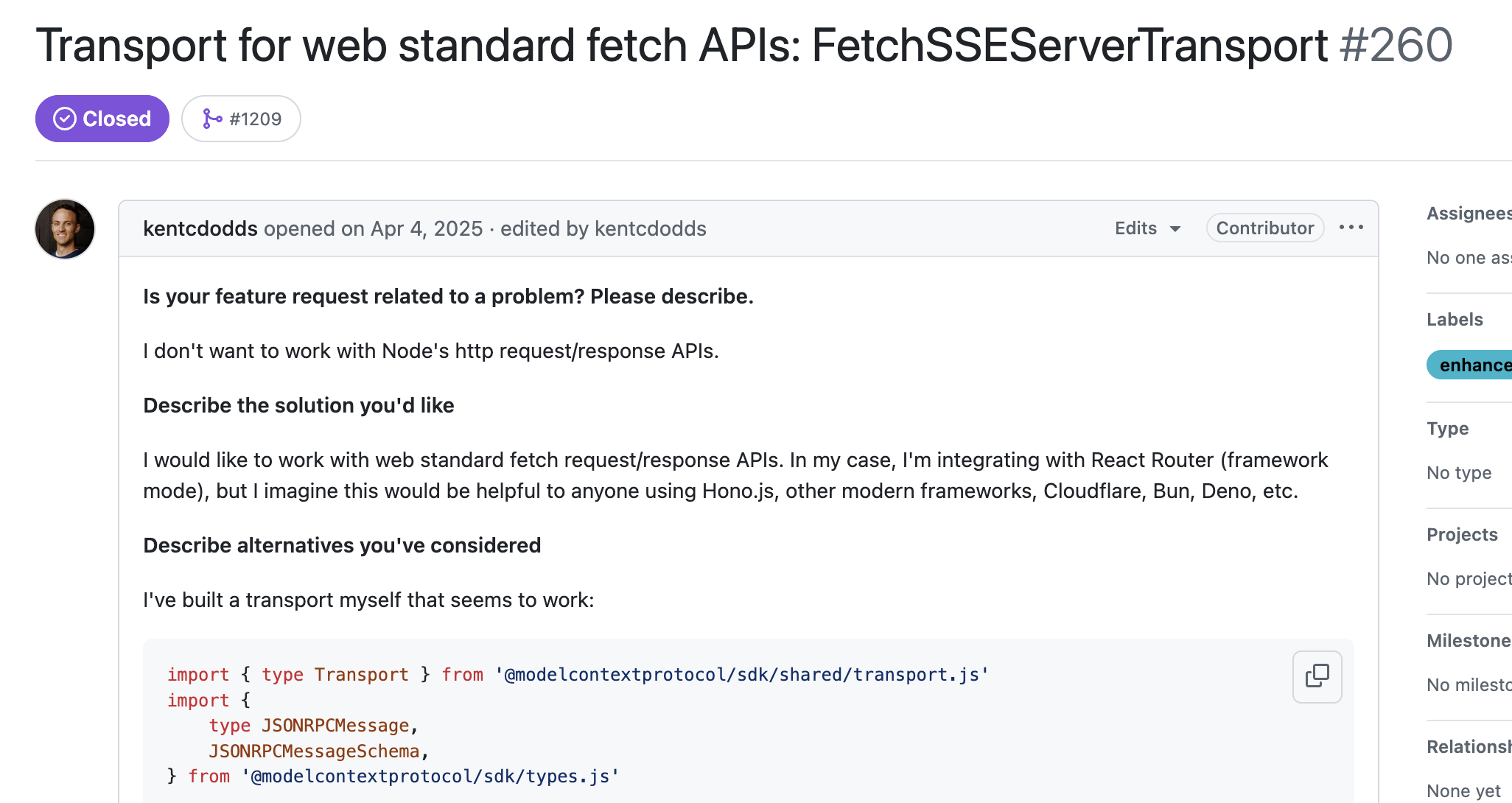

https://github.com/modelcontextprotocol/typescript-sdk/issues/260

const transport = new WebStandardStreamableHTTPServerTransport()

app.all('/mcp', async (c) => {

return transport.handleRequest(c.req.raw)

})

@modelcontextprotocol/hono

Makes the parsed request body available via `c.get('parsedBody')`

import { McpServer, WebStandardStreamableHTTPServerTransport } from '@modelcontextprotocol/server'

import { createMcpHonoApp } from '@modelcontextprotocol/hono'

const server = new McpServer({ name: 'my-server', version: '1.0.0' })

const transport = new WebStandardStreamableHTTPServerTransport({ sessionIdGenerator: undefined })

await server.connect(transport)

const app = createMcpHonoApp()

app.all('/mcp', (c) => {

return transport.handleRequest(c.req.raw, { parsedBody: c.get('parsedBody') })

})

@modelcontextprotocol/node

`getRequestListener` receives the Node.js req / res, converts req into a Web Standards Request, passes it to the handler, and converts the returned Response back into res.

// https://github.com/modelcontextprotocol/typescript-sdk/blob/main/packages/middleware/node/src/streamableHttp.ts

import { getRequestListener } from '@hono/node-server';

//...

async handleRequest (

req: IncomingMessage & { auth?: AuthInfo },

res: ServerResponse,

parsedBody?: unknown

): Promise<void> {

// Store context for this request to pass through auth and parsedBody

// We need to intercept the request creation to attach this context

const authInfo = req.auth

// Create a custom handler that includes our context

// overrideGlobalObjects: false prevents Hono from overwriting global Response, which would

// break frameworks like Next.js whose response classes extend the native Response

const handler = getRequestListener(

async (webRequest: Request) => {

return this._webStandardTransport.handleRequest(webRequest, {

authInfo,

parsedBody

})

},

{ overrideGlobalObjects: false }

)

// Delegate to the request listener which handles all the Node.js <-> Web Standard conversion

// including proper SSE streaming support

await handler(req, res)

}

Use agents/mcp

Use @hono/mcp

@hono/mcp

import { McpServer } from '@modelcontextprotocol/sdk/server/mcp.js'

import { StreamableHTTPTransport } from '@hono/mcp'

import { Hono } from 'hono'

import { mcpServer } from './mcp-server'

const app = new Hono()

// Initialize the transport

const transport = new StreamableHTTPTransport()

app.all('/mcp', async (c) => {

if (!mcpServer.isConnected()) {

// Connect the mcp with the transport

await mcpServer.connect(transport)

}

return transport.handleRequest(c)

})

Use the pkg.pr.new version:

bun add https://pkg.pr.new/modelcontextprotocol/typescript-sdk/@modelcontextprotocol/server@1326

mcp-server.ts:

// mcp-server.ts

import { McpServer } from '@modelcontextprotocol/server'

import * as z from 'zod/v4'

export const mcpServer = new McpServer({

name: 'simple-server',

version: '0.0.1'

})

mcpServer.registerTool(

'add',

{

title: 'Add a to b',

inputSchema: { a: z.number(), b: z.number() }

},

async ({ a, b }) => {

return {

content: [{ type: 'text', text: `${a + b}` }]

}

}

)

index.ts:

// index.ts

import { Hono } from 'hono'

import { WebStandardStreamableHTTPServerTransport } from '@modelcontextprotocol/server'

import { mcpServer } from './mcp-server'

const app = new Hono()

const transport = new WebStandardStreamableHTTPServerTransport()

app.all('/mcp', async (c) => {

if (!mcpServer.isConnected()) {

await mcpServer.connect(transport)

}

return transport.handleRequest(c.req.raw)

})

export default app

Inspector:

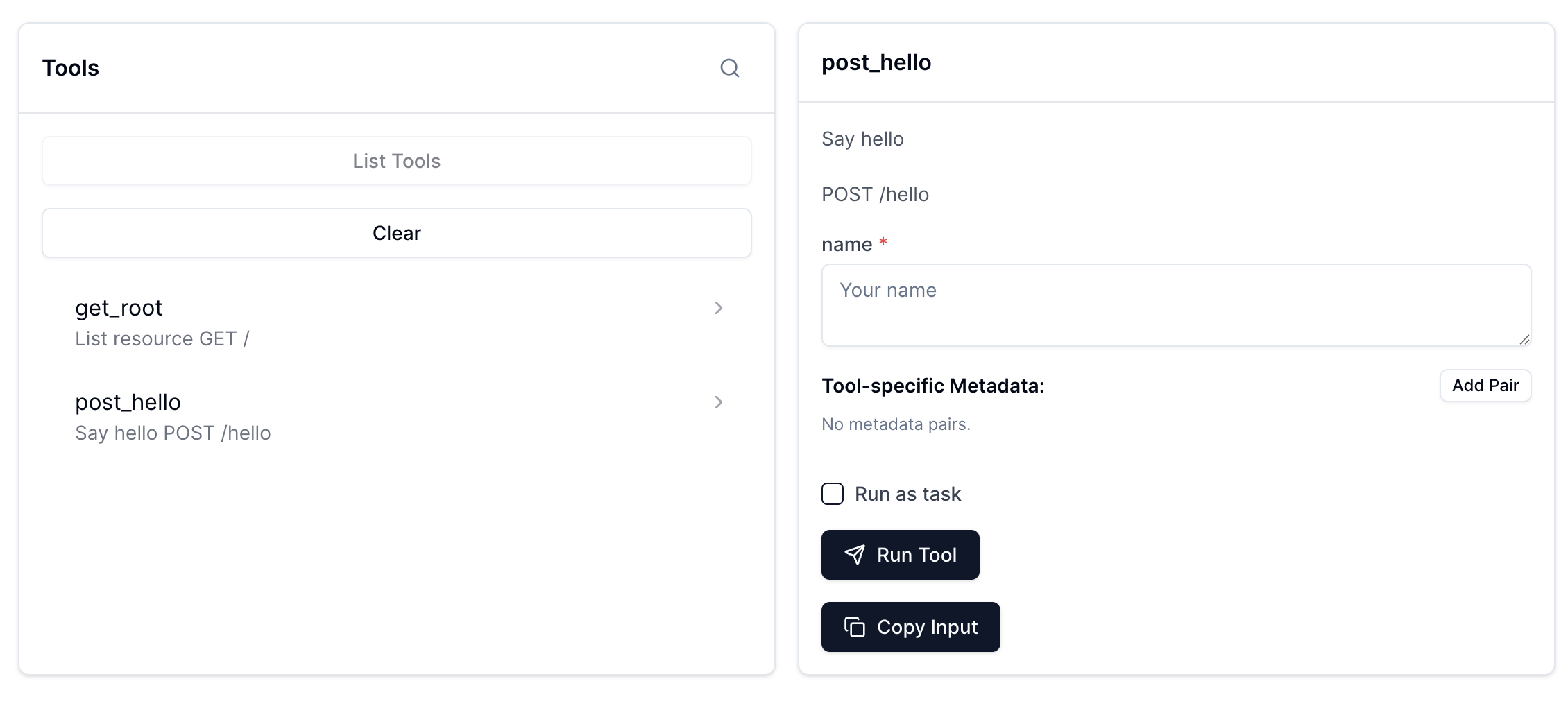

DANGEROUSLY_OMIT_AUTH=true npx @modelcontextprotocol/inspector

import { Hono } from 'hono'

import { z } from 'zod'

import { mcp, registerTool } from 'hono-mcp-server'

const app = new Hono()

app.get('/', (c) => {

return c.json({ message: 'Hello, MCP Server!' })

})

app.post(

'/hello',

registerTool({

description: 'Say hello',

inputSchema: {

name: z.string().describe('Your name')

}

}),

(c) => {

const { name } = c.req.valid('json') // Typed!

return c.json({ message: `Hello ${name}!` })

}

)

export default mcp(app, {

name: 'Simple MCP',

version: '1.0.0'

})

import { Hono } from 'hono'

const app = new Hono<{

Bindings: CloudflareBindings

}>()

app.get('/', async (c) => {

const stream = await c.env.AI.run('@cf/meta/llama-3.3-70b-instruct-fp8-fast', {

messages: [

{ role: 'system', content: 'You are a ramen master' },

{

role: 'user',

content: 'What is the tonkotsu ramen?'

}

],

stream: true

})

return c.body(stream, 200, {

'Content-Type': 'text/event-stream'

})

})

export default app

Client implementation:

import { stream } from 'fetch-event-stream'

import { stdout } from 'node:process'

const events = await stream('http://localhost:8787')

for await (let event of events) {

if (event.data) {

try {

const data = JSON.parse(event.data)

stdout.write(data.response)

} catch {}

}

}

import { Hono } from 'hono'

import Replicate from 'replicate'

const app = new Hono()

app.get('/', async (c) => {

const replicate = new Replicate()

const output = await replicate.run('google/imagen-4', {

input: {

prompt: 'A mascot of Hono framework flying in the sky'

}

})

return c.body(output as ReadableStream)

})

export default app

Use create-hono and choose cloudflare-workers+vite:

bun create hono@latest my-chat

Add AI to the bindings in wrangler.jsonc:

{

"$schema": "node_modules/wrangler/config-schema.json",

"name": "my-chat",

"compatibility_date": "2025-08-03",

"main": "./src/index.tsx",

"ai": {

"binding": "AI",

"remote": true

}

}

Generate the type definitions:

bun run cf-typegen

Pass them to the generics:

// src/index.tsx

const app = new Hono<{ Bindings: CloudflareBindings }>()

Call the Workers AI LLM and return the stream:

// src/index.tsx

app.get('/stream', async (c) => {

const stream = await c.env.AI.run('@cf/meta/llama-3.3-70b-instruct-fp8-fast', {

messages: [{ role: 'user', content: 'Hello!' }],

stream: true

})

return c.body(stream, 200, {

'Content-Type': 'text/event-stream'

})

})

Define a schema for receiving messages:

// src/index.tsx

import * as z from 'zod'

// ...

const schema = z.object({

messages: z.array(

z.object({

role: z.enum(['system', 'user', 'assistant']),

content: z.string()

})

)

})

Receive the messages via POST and validate them:

// src/index.tsx

import { zValidator } from '@hono/zod-validator'

// ...

app.post('/stream', zValidator('json', schema), async (c) => {

const data = c.req.valid('json')

const stream = await c.env.AI.run('@cf/meta/llama-3.3-70b-instruct-fp8-fast', {

messages: data.messages,

stream: true

})

return c.body(stream, 200, {

'Content-Type': 'text/event-stream'

})

})

Build the top page with SSR:

// src/index.tsx

app.get('/', (c) => {

return c.render(

<div id="chat-container">

<div id="messages"></div>

<form id="chat-form">

<textarea id="input" placeholder="Type a message..." rows={3}></textarea>

<button type="submit">Send</button>

</form>

</div>

)

})

Import Script from vite-ssr-components and point it at the client file:

// src/renderer.tsx

import { jsxRenderer } from 'hono/jsx-renderer'

import { Script, Link, ViteClient } from 'vite-ssr-components/hono'

export const renderer = jsxRenderer(

({ children }) => {

return (

<html>

<head>

<ViteClient />

<Script src="/src/client.ts" />

<Link href="/src/style.css" rel="stylesheet" />

</head>

<body>{children}</body>

</html>

)

},

{ stream: true }

)

Create client.ts:

// src/client.ts

/// <reference lib="dom" />

/// <reference lib="dom.iterable" />

fetch-event-stream may be handy for parsing the stream:

// src/client.ts

import { events } from 'fetch-event-stream'

Leave the rest to AI.
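
For reference, here is roughly what the stream parsing involves, sketched without any dependency. This is a simplified illustration, not how fetch-event-stream is implemented. It assumes the stream carries `data:` lines with a JSON payload that has a `response` field, which is the shape the client code earlier reads. `sseData` and `collectReply` are hypothetical names.

```typescript
// Yield the payload of every `data:` line in a text/event-stream body.
// Simplification: each data line is treated as its own event.
export async function* sseData(body: ReadableStream<Uint8Array>): AsyncGenerator<string> {
  const reader = body.getReader()
  const decoder = new TextDecoder()
  let buffer = ''
  for (;;) {
    const { done, value } = await reader.read()
    if (done) break
    buffer += decoder.decode(value, { stream: true })
    const lines = buffer.split('\n')
    // The last element may be an incomplete line, so keep it for the next chunk
    buffer = lines.pop() ?? ''
    for (const line of lines) {
      if (line.startsWith('data:')) yield line.slice(5).trim()
    }
  }
  // Flush a final line that was not terminated by a newline
  if (buffer.startsWith('data:')) yield buffer.slice(5).trim()
}

// Concatenate the `response` fields of all JSON payloads in the stream
export async function collectReply(res: Response): Promise<string> {
  let text = ''
  if (!res.body) return text
  for await (const data of sseData(res.body)) {
    try {
      const json = JSON.parse(data) as { response?: string }
      text += json.response ?? ''
    } catch {
      // Payloads that are not JSON (for example a closing marker) are skipped
    }
  }
  return text
}
```

In src/client.ts, the Response would come from a `fetch('/stream', { method: 'POST', ... })` call whose JSON body matches the `messages` schema, and each chunk would be appended to `#messages` as it arrives instead of being collected at the end.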

.

├── app

│ ├── client.ts

│ ├── global.d.ts

│ ├── routes

│ │ ├── _404.tsx

│ │ ├── _error.tsx

│ │ ├── _renderer.tsx

│ │ └── index.mdx

│ ├── server.ts

│ └── style.css

├── package.json

├── public

│ └── favicon.ico

├── tsconfig.json

├── vite.config.ts

└── wrangler.jsonc

That was a talk about doing AI with Hono.